# MongoDB and GridFS for Inter and Intra Datacenter Data Replication

> Original: [http://highscalability.com/blog/2013/1/14/mongodb-and-gridfs-for-inter-and-intra-datacenter-data-repli.html](http://highscalability.com/blog/2013/1/14/mongodb-and-gridfs-for-inter-and-intra-datacenter-data-repli.html)

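*This is a guest post by [Jeff Behl](jbehl@logicmonitor.com), a VP at LogicMonitor. [Jeff](@jeffbehl) has been a bit herder for the last 20 years, architecting and overseeing the infrastructure for a number of SaaS-based companies.*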

## Data Replication for Disaster Recovery

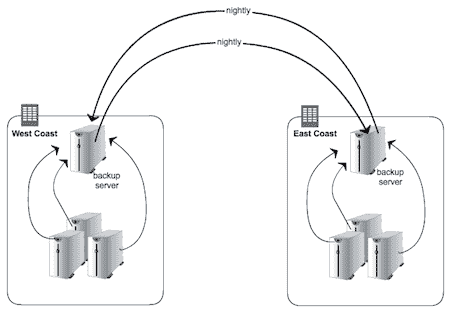

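An inevitable part of disaster recovery planning is ensuring that customer data exists in more than one location. At LogicMonitor, a SaaS-based monitoring solution for physical, virtual, and cloud environments, we wanted copies of customer data files both within a data center and outside of it. The former protects against the loss of an individual server within a facility, and the latter allows recovery in the event of the complete loss of a data center.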

## Where We Were: Rsync

Like most everyone who works in a Linux environment, we used our trusty friend rsync to copy data around.

Rsync is tried, true and tested, and works well when the number of servers, the amount of data, and the number of files are not horrendous. When any of these is no longer the case, situations arise, and when the number of rsync jobs needed increases to more than a handful, one is inevitably faced with a number of issues:

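* Backup jobs overlapping

* Backup job times increasing

* Too many simultaneous jobs overloading servers or the network

* No easy way to coordinate the additional steps needed after an rsync job completes

* No easy way to monitor job counts and job statistics, or to get alerted on failures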

Here at LogicMonitor, [our philosophy](http://blog.logicmonitor.com/2012/07/17/our-philosophy-of-monitoring/) and reason for being is rooted in the belief that everything in your infrastructure needs to be monitored, so the inability to easily monitor the status of rsync jobs was particularly vexing (and no, we do not believe that emailing job status is monitoring!). We needed better statistics and alerting, both to keep track of backup jobs and to be able to put some logic into the jobs themselves to prevent issues like too many running simultaneously.

The obvious solution was to store this information in a database. A database repository for backup job metadata, where jobs themselves can report their status, and where other backup components can get information in order to coordinate tasks such as removing old jobs, was clearly needed. It would also enable us to monitor backup job status via simple queries for information such as the number of jobs running (total, and on a per-server basis), the time since the last backup, the size of the backup jobs, and so on.

## MongoDB as a Backup Job Metadata Store

The type of backup job statistics was more than likely going to evolve over time, so MongoDB came to light with its “[schemaless](http://blog.mongodb.org/post/119945109/why-schemaless)” document store design. It seemed the perfect fit: easy to set up, easy to query, schemaless, and a simple JSON-style structure for storing job information. As an added bonus, MongoDB replication is excellent: it is robust and extremely easy to implement and maintain. Compared to MySQL, adding members to a MongoDB replica set is auto-magic.

So the first idea was to keep using rsync, but track the status of jobs in MongoDB. But it was a kludge to have to wrap all sorts of reporting and querying logic in scripts surrounding rsync. The backup job metainfo and the actual backed up files were still separate and decoupled, with the metadata in MongoDB and the backed up files residing on a disk on some system (not necessarily the same one).

How nice it would be if the data and the database were combined. If I could query for a specific backup job, then use the same query language again for an actual backed up file and get it. If restoring data files was just a simple query away... [Enter GridFS](http://docs.mongodb.org/manual/applications/gridfs/).
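The first idea described above, rsync plus job status tracked in MongoDB, could be sketched roughly as follows. This is only an illustration under stated assumptions: the field names (`server`, `portal`, `status`, `started_at`, `finished_at`, `size_bytes`), the helper names, and the per-server limit are all hypothetical and do not come from the original post. The `jobs` argument is assumed to behave like a pymongo `Collection`.

```python
from datetime import datetime, timezone


def new_job_doc(server, portal, started_at=None):
    """Build the metadata document a backup job might insert when it starts.

    All field names here are hypothetical, chosen only for illustration.
    """
    return {
        "server": server,
        "portal": portal,
        "status": "running",
        "started_at": started_at or datetime.now(timezone.utc),
        "finished_at": None,
        "size_bytes": None,
    }


def running_jobs_filter(server=None):
    """Return a MongoDB query filter for running jobs, optionally on one server."""
    query = {"status": "running"}
    if server is not None:
        query["server"] = server
    return query


def may_start_job(jobs, server, max_per_server=2):
    """Return True if fewer than max_per_server jobs are running on `server`.

    `jobs` is assumed to expose count_documents(filter), as a pymongo
    Collection does.
    """
    return jobs.count_documents(running_jobs_filter(server)) < max_per_server
```

Note that checking the count and then inserting a new job document is not atomic, so two jobs starting at the same instant could both pass the check. A real setup would need to decide whether that matters.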

## Why GridFS

You can read up on the details of GridFS on the MongoDB site, but suffice it to say it is a simple file system overlay on top of MongoDB (files are simply chunked up and stored in the same manner that all documents are). Instead of having scripts surround rsync, our backup scripts store the data and the metadata at the same time and in the same place, so everything is easily queried.
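To make the "chunked up and stored as documents" idea concrete, here is a simplified pure-Python sketch of the layout GridFS uses: one file document plus a sequence of chunk documents. The field names `files_id`, `n`, `data`, `length`, `chunkSize`, `filename`, and `metadata` follow the GridFS convention, but the function names are hypothetical, the file document is trimmed down (a real one also carries fields such as `uploadDate`), and the 255 KiB chunk size is an assumption based on the default in recent drivers.

```python
CHUNK_SIZE = 255 * 1024  # assumed chunk size; recent drivers default to 255 KiB


def to_gridfs_style_docs(file_id, filename, data, chunk_size=CHUNK_SIZE, metadata=None):
    """Split `data` (bytes) into one file document and a list of chunk documents."""
    file_doc = {
        "_id": file_id,
        "filename": filename,
        "length": len(data),
        "chunkSize": chunk_size,
        "metadata": metadata or {},
    }
    chunks = [
        # Each chunk points back at its file and records its position `n`.
        {"files_id": file_id, "n": n, "data": data[offset:offset + chunk_size]}
        for n, offset in enumerate(range(0, len(data), chunk_size))
    ]
    return file_doc, chunks


def reassemble(chunks):
    """Rebuild the original bytes by concatenating chunks in order of `n`."""
    return b"".join(c["data"] for c in sorted(chunks, key=lambda c: c["n"]))
```

In practice the driver does this for you. With pymongo, for example, `gridfs.GridFS(db).put(data, filename=..., metadata=...)` writes both kinds of document, and because the metadata lives on the file document it can be queried like any other field.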

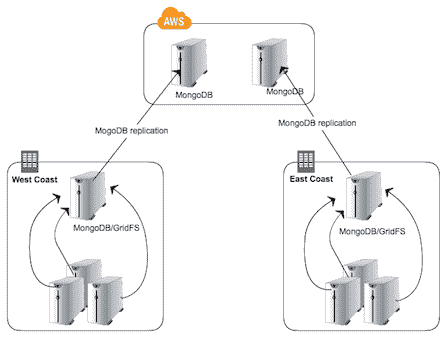

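Of course, MongoDB replication works with GridFS, meaning backed up files are immediately replicated both within the data center and to an off-site location. With a replica inside Amazon EC2, snapshots can be taken to keep as many historical backups as desired. Our setup now looks like this: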

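Advantages

* Job status information is available via simple queries

* Backup jobs themselves (including the files) can be retrieved and deleted via queries

* Replication to the off-site location is practically immediate

* Sharding is possible

* With EBS volumes, MongoDB backups (metadata and actual backup data) via snapshots are easy and limitless

* Automated status monitoring is easy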
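As an illustration of retrieving and deleting backups via queries, the sketch below removes backed up files older than a retention window. It assumes `fs` behaves like a pymongo `gridfs.GridFS` object (with `find(filter)` yielding objects that have an `_id`, and `delete(file_id)`). The `metadata.portal` field, the function names, and the 30-day retention are hypothetical and not taken from the original post.

```python
from datetime import datetime, timedelta, timezone


def old_backups_filter(portal, now, keep_days=30):
    """Return a query filter matching a portal's files older than keep_days."""
    cutoff = now - timedelta(days=keep_days)
    # `uploadDate` is set by GridFS; `metadata.portal` is a hypothetical field.
    return {"metadata.portal": portal, "uploadDate": {"$lt": cutoff}}


def delete_old_backups(fs, portal, now=None, keep_days=30):
    """Delete matching files through a GridFS-like object and return the count."""
    now = now or datetime.now(timezone.utc)
    # Collect ids first so nothing is deleted while the cursor is being read.
    ids = [grid_out._id for grid_out in fs.find(old_backups_filter(portal, now, keep_days))]
    for file_id in ids:
        fs.delete(file_id)
    return len(ids)
```

The same filter could be passed to `fs.find(...)` on its own to list or restore files instead of deleting them.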

## Monitoring via LogicMonitor

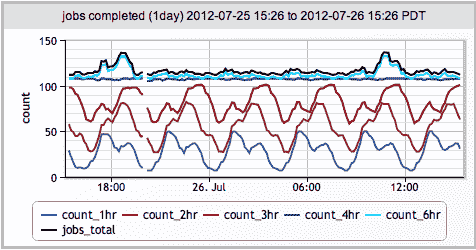

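LogicMonitor believes that all aspects of your infrastructure, from the physical level to the application level, should be in the same monitoring system: UPSs, chassis temperature, OS statistics, database statistics, load balancers, caching layers, JMX statistics, disk write latency, and so on. It should all be there, and that includes backups. To this end, LogicMonitor can not only monitor MongoDB general statistics and health, but can also execute arbitrary queries against MongoDB. These queries can ask for anything, from login statistics to page views to (guess what?) backup jobs completed in the last hour.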

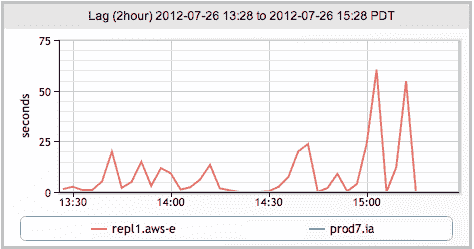

Now that our backups are all done via MongoDB, I can keep track of (and more importantly, be alerted on):

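* The number of backup jobs running per server

* The number of backups running simultaneously across all servers

* Any customer portal that has not been backed up in more than 6 hours

* MongoDB replication lag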
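A check such as "any customer portal not backed up in more than 6 hours" could be expressed as a MongoDB aggregation pipeline. The sketch below builds such a pipeline and includes a pure-Python equivalent of the same logic. The field names (`status`, `portal`, `finished_at`) and function names are hypothetical; the post does not show its actual schema or queries.

```python
from datetime import datetime, timedelta, timezone


def stale_portals_pipeline(now, max_age_hours=6):
    """Build an aggregation pipeline listing portals whose latest completed
    backup finished before the cutoff."""
    cutoff = now - timedelta(hours=max_age_hours)
    return [
        {"$match": {"status": "completed"}},
        {"$group": {"_id": "$portal", "last_backup": {"$max": "$finished_at"}}},
        {"$match": {"last_backup": {"$lt": cutoff}}},
    ]


def stale_portals(job_docs, now, max_age_hours=6):
    """Pure-Python equivalent of the pipeline, for an in-memory list of job docs."""
    cutoff = now - timedelta(hours=max_age_hours)
    last = {}
    for job in job_docs:
        if job["status"] != "completed":
            continue
        portal = job["portal"]
        if portal not in last or job["finished_at"] > last[portal]:
            last[portal] = job["finished_at"]
    return sorted(portal for portal, finished in last.items() if finished < cutoff)
```

With pymongo the pipeline would be passed to `collection.aggregate(...)`. One limitation of this sketch: a portal that has never completed a backup produces no group at all, so it would need a separate check.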

### Replication Lag
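One common way to derive replication lag is to compare each secondary's last applied operation time with the primary's, using the document returned by the `replSetGetStatus` command. The sketch below assumes that document's `members` entries carry `name`, `stateStr`, and `optimeDate`, as they do in MongoDB's output. The function name is hypothetical, and this is not necessarily how LogicMonitor computes the value.

```python
from datetime import datetime


def replication_lag_seconds(status):
    """Return {member name: seconds behind the primary} for each secondary.

    `status` is assumed to be the dict returned by replSetGetStatus.
    Raises StopIteration if the set currently has no primary.
    """
    members = status["members"]
    primary = next(m for m in members if m["stateStr"] == "PRIMARY")
    return {
        m["name"]: (primary["optimeDate"] - m["optimeDate"]).total_seconds()
        for m in members
        if m["stateStr"] == "SECONDARY"
    }


if __name__ == "__main__":
    # Hand-written sample shaped like replSetGetStatus output (hypothetical hosts).
    sample = {
        "members": [
            {"name": "db1:27017", "stateStr": "PRIMARY", "optimeDate": datetime(2013, 1, 14, 12, 0, 10)},
            {"name": "db2:27017", "stateStr": "SECONDARY", "optimeDate": datetime(2013, 1, 14, 12, 0, 4)},
        ]
    }
    print(replication_lag_seconds(sample))
```

With pymongo, the status document can be fetched with `client.admin.command("replSetGetStatus")`.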

### Job Completion

Are you using FUSE to access GridFS, or are you coding everything against the API?
