1. Prepare the Docker image

```
docker pull prom/prometheus:v2.14.0
docker tag 7317640d555e harbor.od.com/infra/prometheus:v2.14.0
docker push harbor.od.com/infra/prometheus:v2.14.0
```

Prepare the directory

```
mkdir -p /data/k8s-yaml/prometheus-server
cd /data/k8s-yaml/prometheus-server
```

Prepare the RBAC resource manifests

cat rbac.yaml

```
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: prometheus
  namespace: infra
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: prometheus
rules:
- apiGroups:
  - ""
  resources:
  - nodes
  - nodes/metrics
  - services
  - endpoints
  - pods
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - configmaps
  verbs:
  - get
- nonResourceURLs:
  - /metrics
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: infra
```
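To make the effect of the three rules more concrete, here is a minimal Python sketch of how a request could be matched against them. It is a simplified model for illustration only, not the Kubernetes authorizer (no wildcards, no resource names): the `RULES` list mirrors the ClusterRole in rbac.yaml, and the helper name `allowed` is made up.

```python
# Simplified model of the ClusterRole in rbac.yaml; not the real Kubernetes authorizer.
RULES = [
    {"apiGroups": [""],
     "resources": ["nodes", "nodes/metrics", "services", "endpoints", "pods"],
     "verbs": ["get", "list", "watch"]},
    {"apiGroups": [""], "resources": ["configmaps"], "verbs": ["get"]},
    {"nonResourceURLs": ["/metrics"], "verbs": ["get"]},
]


def allowed(verb, resource=None, api_group="", url=None):
    """Return True if any rule grants `verb` on the given resource or URL."""
    for rule in RULES:
        if verb not in rule["verbs"]:
            continue
        if url is not None:
            # Non-resource request such as GET /metrics.
            if url in rule.get("nonResourceURLs", []):
                return True
        elif (api_group in rule.get("apiGroups", [])
              and resource in rule.get("resources", [])):
            return True
    return False


print(allowed("list", "pods"))        # service discovery lists and watches pods
print(allowed("list", "configmaps"))  # configmaps are granted `get` only
print(allowed("get", url="/metrics"))
```

In this model, listing pods and fetching `/metrics` are permitted while listing configmaps is not, which matches the read-only scope the manifest gives the `prometheus` ServiceAccount.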

cat dp.yaml

```
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "5"
  labels:
    name: prometheus
  name: prometheus
  namespace: infra
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: prometheus
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      containers:
      - name: prometheus
        image: harbor.od.com/infra/prometheus:v2.14.0
        imagePullPolicy: IfNotPresent
        command:
        - /bin/prometheus
        args:
        - --config.file=/data/etc/prometheus.yml
        - --storage.tsdb.path=/data/prom-db
        - --storage.tsdb.min-block-duration=10m
        - --storage.tsdb.retention=72h
        - --web.enable-lifecycle
        ports:
        - containerPort: 9090
          protocol: TCP
        volumeMounts:
        - mountPath: /data
          name: data
        resources:
          requests:
            cpu: "1000m"
            memory: "1.5Gi"
          limits:
            cpu: "2000m"
            memory: "3Gi"
      imagePullSecrets:
      - name: harbor
      securityContext:
        runAsUser: 0
      serviceAccountName: prometheus
      volumes:
      - name: data
        nfs:
          server: hdss-6.host.com
          path: /data/nfs-volume/prometheus
```

cat svc.yaml

```
apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: infra
spec:
  ports:
  - port: 9090
    protocol: TCP
    targetPort: 9090
  selector:
    app: prometheus
```

cat ingress.yaml

```
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: traefik
  name: prometheus
  namespace: infra
spec:
  rules:
  - host: prometheus.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: prometheus
          servicePort: 9090
```

The Ingress uses the domain name `prometheus.od.com`, so add a DNS record for it:

```
vi /var/named/od.com.zone
# add the following record to the zone file:
prometheus         A    10.4.7.10

systemctl restart named
```

Prepare the directories and certificates

```
mkdir -p /data/nfs-volume/prometheus/etc
mkdir -p /data/nfs-volume/prometheus/prom-db
cd /data/nfs-volume/prometheus/etc/

# Copy the certificates referenced by the configuration file:
cp /opt/certs/ca.pem ./
cp /opt/certs/client.pem ./
cp /opt/certs/client-key.pem ./
```

Create the Prometheus configuration file

```
cat >/data/nfs-volume/prometheus/etc/prometheus.yml <<'EOF'
global:
  scrape_interval: 15s
  evaluation_interval: 15s
scrape_configs:
- job_name: 'etcd'
  tls_config:
    ca_file: /data/etc/ca.pem
    cert_file: /data/etc/client.pem
    key_file: /data/etc/client-key.pem
  scheme: https
  static_configs:
  - targets:
    - '10.4.7.12:2379'
    - '10.4.7.21:2379'
    - '10.4.7.22:2379'
- job_name: 'kubernetes-apiservers'
  kubernetes_sd_configs:
  - role: endpoints
  scheme: https
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  relabel_configs:
  - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
    action: keep
    regex: default;kubernetes;https
- job_name: 'kubernetes-pods'
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
    action: keep
    regex: true
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
    action: replace
    target_label: __metrics_path__
    regex: (.+)
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __address__
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
- job_name: 'kubernetes-kubelet'
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  - source_labels: [__meta_kubernetes_node_name]
    regex: (.+)
    target_label: __address__
    replacement: ${1}:10255
- job_name: 'kubernetes-cadvisor'
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  - source_labels: [__meta_kubernetes_node_name]
    regex: (.+)
    target_label: __address__
    replacement: ${1}:4194
- job_name: 'kubernetes-kube-state'
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
  - source_labels: [__meta_kubernetes_pod_label_grafanak8sapp]
    regex: .*true.*
    action: keep
  - source_labels: ['__meta_kubernetes_pod_label_daemon', '__meta_kubernetes_pod_node_name']
    regex: 'node-exporter;(.*)'
    action: replace
    target_label: nodename
- job_name: 'blackbox_http_pod_probe'
  metrics_path: /probe
  kubernetes_sd_configs:
  - role: pod
  params:
    module: [http_2xx]
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_blackbox_scheme]
    action: keep
    regex: http
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_blackbox_port, __meta_kubernetes_pod_annotation_blackbox_path]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+);(.+)
    replacement: $1:$2$3
    target_label: __param_target
  - action: replace
    target_label: __address__
    replacement: blackbox-exporter.kube-system:9115
  - source_labels: [__param_target]
    target_label: instance
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
- job_name: 'blackbox_tcp_pod_probe'
  metrics_path: /probe
  kubernetes_sd_configs:
  - role: pod
  params:
    module: [tcp_connect]
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_blackbox_scheme]
    action: keep
    regex: tcp
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_blackbox_port]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __param_target
  - action: replace
    target_label: __address__
    replacement: blackbox-exporter.kube-system:9115
  - source_labels: [__param_target]
    target_label: instance
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
- job_name: 'traefik'
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scheme]
    action: keep
    regex: traefik
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
    action: replace
    target_label: __metrics_path__
    regex: (.+)
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __address__
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
EOF
```
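Several jobs in this configuration rewrite `__address__` with a `replace` rule. As a rough illustration of what such a rule does, the sketch below imitates it in Python: the source label values are joined with `;`, the regex must match the whole joined string, and `$1` / `${1}` in the replacement refer to capture groups. The function name `relabel_replace`, the sample pod IP, and the sample node name are made up for the example. Prometheus itself uses RE2, so this is only an approximation.

```python
import re


def relabel_replace(values, regex, replacement, separator=";"):
    """Imitate a Prometheus `replace` relabel rule.

    Returns the new target label value, or None when the regex does not
    match (Prometheus then leaves the target label untouched).
    """
    joined = separator.join(values)
    match = re.fullmatch(regex, joined)  # the regex is anchored at both ends
    if match is None:
        return None
    # Turn $1 / ${1} into Python's \g<1> group references.
    template = re.sub(r"\$\{?(\d+)\}?", r"\\g<\1>", replacement)
    return match.expand(template)


# Pod address plus the prometheus_io_port annotation (kubernetes-pods job).
print(relabel_replace(["172.7.21.5:8080", "12346"],
                      r"([^:]+)(?::\d+)?;(\d+)", "$1:$2"))
# Node name rewritten to port 10255 (kubernetes-kubelet job).
print(relabel_replace(["hdss7-21.host.com"], r"(.+)", "${1}:10255"))
# No port annotation: the regex does not match, so nothing is rewritten.
print(relabel_replace(["172.7.21.5:8080", ""],
                      r"([^:]+)(?::\d+)?;(\d+)", "$1:$2"))
```

The last call shows why a pod without the port annotation keeps its discovered address: the rule simply does not apply.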

Apply the resource manifests

```
kubectl apply -f http://k8s-yaml.od.com/prometheus-server/rbac.yaml
kubectl apply -f http://k8s-yaml.od.com/prometheus-server/dp.yaml
kubectl apply -f http://k8s-yaml.od.com/prometheus-server/svc.yaml
kubectl apply -f http://k8s-yaml.od.com/prometheus-server/ingress.yaml
```

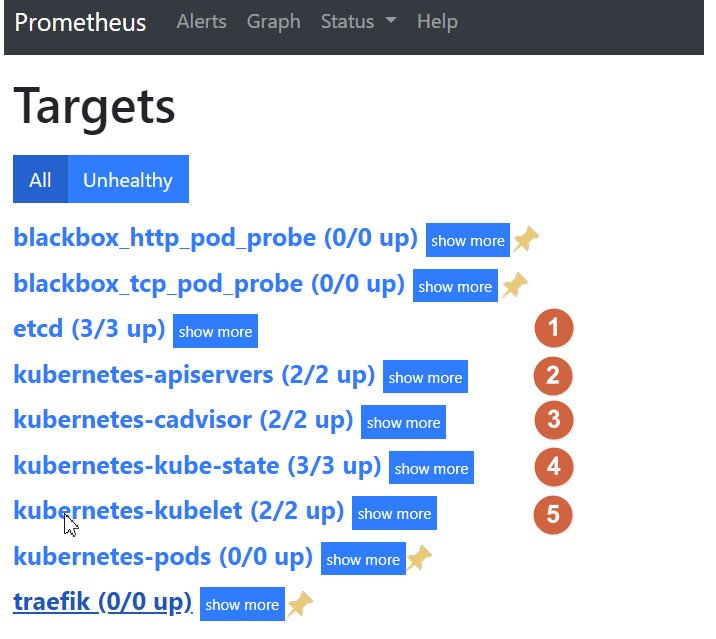

Open http://prometheus.od.com in a browser. If the page loads, Prometheus has started successfully.
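The same check can be scripted. The sketch below is one possible approach, assuming the `/-/ready` endpoint that Prometheus 2.x serves; the function name `prometheus_ready` and the injectable `opener` parameter are made up for the example so that it can be exercised without a live cluster.

```python
from urllib.request import urlopen


def prometheus_ready(base_url, opener=urlopen, timeout=5):
    """Return True if GET <base_url>/-/ready answers with HTTP 200."""
    try:
        with opener(f"{base_url}/-/ready", timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # Covers DNS failures, refused connections and HTTP error statuses
        # (urllib raises HTTPError, a subclass of OSError, for those).
        return False


# Example call (needs a host that can resolve prometheus.od.com):
# prometheus_ready("http://prometheus.od.com")
```

Run from a host that resolves `prometheus.od.com`, the call should return `True` once the pod is up and the Ingress is routing traffic.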

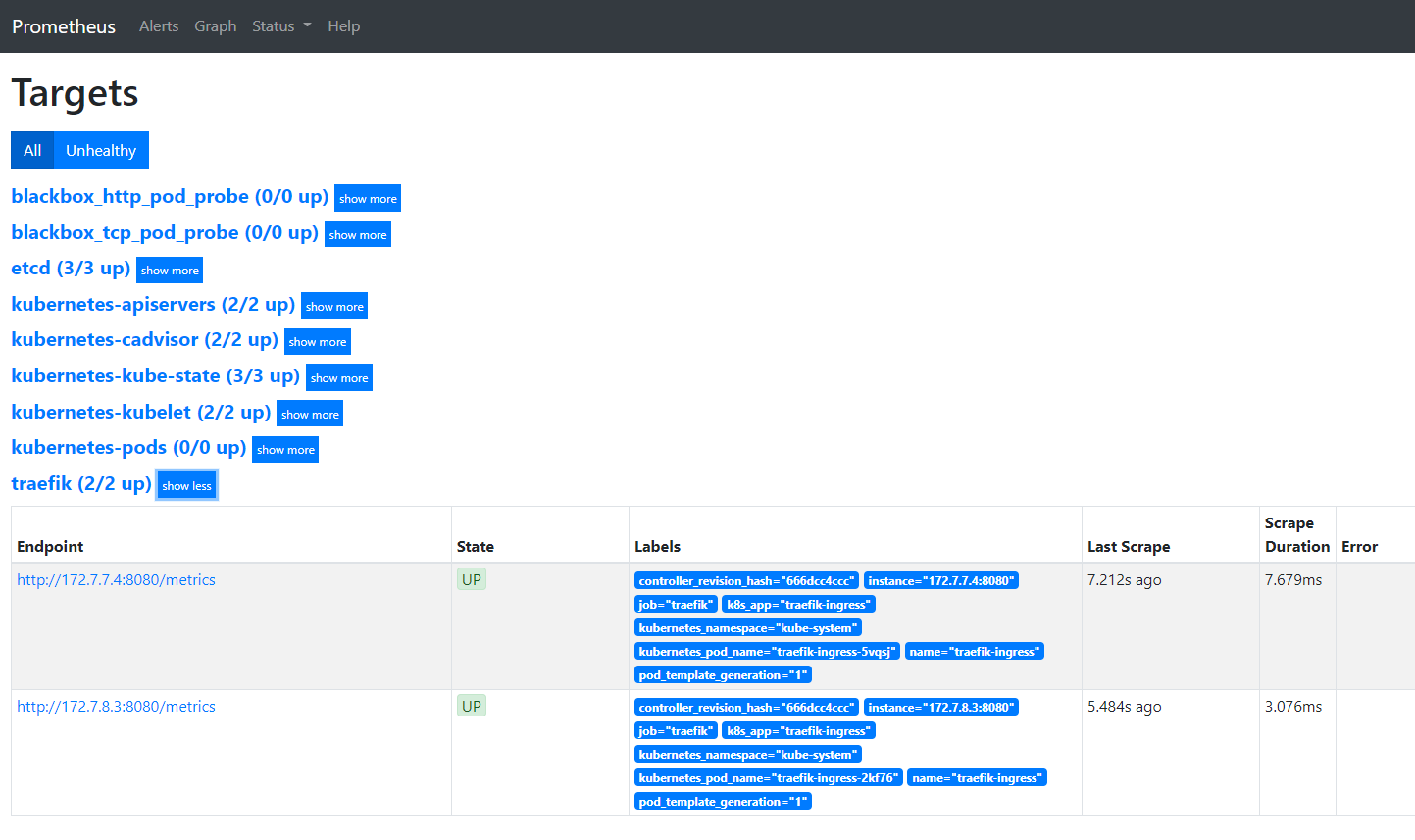

2. Let Prometheus monitor Traefik automatically

Edit the Traefik YAML file and add an `annotations` block at the same level as `labels`:

vim /data/k8s-yaml/traefik/ds.yaml

```
spec:
  template:
    metadata:
      labels:
        k8s-app: traefik-ingress
        name: traefik-ingress
      #-------- begin added content --------
      annotations:
        prometheus_io_scheme: "traefik"
        prometheus_io_path: "/metrics"
        prometheus_io_port: "8080"
      #-------- end added content --------
    spec:
      serviceAccountName: traefik-ingress-controller
```
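These annotation names line up with the `traefik` job in prometheus.yml because Kubernetes service discovery exposes each pod annotation as a `__meta_kubernetes_pod_annotation_<name>` label, with characters that are not valid in a label name replaced by `_`. The following Python sketch illustrates that mapping and the job's `keep` rule; both function names are made up for the example, and it is only a model of the behaviour, not Prometheus code.

```python
import re


def pod_annotation_meta_label(annotation_name):
    """Build the service-discovery label name for a pod annotation."""
    sanitized = re.sub(r"[^a-zA-Z0-9_]", "_", annotation_name)
    return "__meta_kubernetes_pod_annotation_" + sanitized


def kept_by_traefik_job(annotations):
    """Imitate the `keep` rule of the 'traefik' job (regex `traefik`, fully anchored)."""
    # A missing label is treated as an empty string.
    value = annotations.get("prometheus_io_scheme", "")
    return re.fullmatch("traefik", value) is not None


traefik_annotations = {
    "prometheus_io_scheme": "traefik",
    "prometheus_io_path": "/metrics",
    "prometheus_io_port": "8080",
}
print(pod_annotation_meta_label("prometheus_io_scheme"))
print(kept_by_traefik_job(traefik_annotations))
print(kept_by_traefik_job({}))  # a pod without the annotation is dropped
```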

Re-apply the configuration

```
kubectl delete -f http://k8s-yaml.od.com/traefik/ds.yaml
kubectl apply -f http://k8s-yaml.od.com/traefik/ds.yaml
```

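3. Check TCP/HTTP service status with blackbox

blackbox checks whether the services inside the containers are alive, that is, it health-checks their ports. It supports two probe methods: TCP and HTTP.

Use HTTP whenever possible; fall back to TCP only for services that expose no HTTP endpoint.

Prepare the services to be probed

The dubbo services in the test environment are used for the demonstration; other environments are similar.

1. In the dashboard, start apollo-portal and the apollo services in the test namespace

2. dubbo-demo-service uses the TCP annotation

3. dubbo-demo-consumer uses the HTTP annotation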

Add the TCP annotation

Once both services are up, first add a TCP annotation to the dubbo-demo-service resource:

```
vim /data/k8s-yaml/test/dubbo-demo-server/dp.yaml
......
spec:
......
  template:
    metadata:
      labels:
        app: dubbo-demo-service
        name: dubbo-demo-service
      #-------- begin added content --------
      annotations:
        blackbox_port: "20880"
        blackbox_scheme: "tcp"
      #-------- end added content --------
    spec:
      containers:
        image: harbor.zq.com/app/dubbo-demo-service:apollo_200512_0746
```

Add the HTTP annotation

Next, add an HTTP annotation to the dubbo-demo-consumer resource:

vim /data/k8s-yaml/test/dubbo-demo-consumer/dp.yaml

```
spec:
  template:
    metadata:
      labels:
        app: dubbo-demo-consumer
        name: dubbo-demo-consumer
      #-------- begin added content --------
      annotations:
        blackbox_path: "/hello?name=health"
        blackbox_port: "8080"
        blackbox_scheme: "http"
      #-------- end added content --------
    spec:
      containers:
      - name: dubbo-demo-consumer
```
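To see what these three annotations turn into, the sketch below follows the `blackbox_http_pod_probe` job from prometheus.yml: the pod address, port, and path are combined into `__param_target`, which Prometheus sends as the `target` query parameter to the blackbox exporter. The function name `blackbox_probe_url` and the sample pod IP are made up, and the code only models the relabel rule in Python rather than reproducing Prometheus internals.

```python
import re
from urllib.parse import urlencode


def blackbox_probe_url(address, port, path, module="http_2xx",
                       exporter="blackbox-exporter.kube-system:9115"):
    """Build the URL Prometheus would request for one HTTP probe."""
    joined = ";".join([address, port, path])
    match = re.fullmatch(r"([^:]+)(?::\d+)?;(\d+);(.+)", joined)
    if match is None:
        return None  # the rule does not apply, so no probe target is set
    # Equivalent of `replacement: $1:$2$3` in the relabel rule.
    target = f"{match.group(1)}:{match.group(2)}{match.group(3)}"
    query = urlencode({"module": module, "target": target})
    return f"http://{exporter}/probe?{query}"


# 172.7.22.6 is a hypothetical pod IP for dubbo-demo-consumer.
print(blackbox_probe_url("172.7.22.6:8080", "8080", "/hello?name=health"))
```

The TCP job works the same way, except that its rule has no path group and the module is `tcp_connect`.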

4. Add JVM monitoring

Add the following annotations to both dubbo-demo-service and dubbo-demo-consumer so that the JVM metrics of their pods are scraped:

```
vim /data/k8s-yaml/test/dubbo-demo-server/dp.yaml
vim /data/k8s-yaml/test/dubbo-demo-consumer/dp.yaml
      annotations:
        #.... existing annotations omitted ....
        prometheus_io_scrape: "true"
        prometheus_io_port: "12346"
        prometheus_io_path: "/"
```
